Football is more than just a sport; for marketers, it is a universal language. It crosses borders, demographics, and languages, offering brands a unique opportunity to engage with millions of passionate fans. However, launching a football game for a marketing campaign isn’t as simple as putting a digital ball on a screen.

Many brands fall into the trap of creating experiences that are either too “sales-heavy”, which kills the fun immediately, or so focused on complex gameplay that conversion rates suffer. The secret to success lies in the architecture of the campaign: balancing psychology, design, and data capture.

While the tips in this article apply to any football season or local league, they are the exact strategies used to build successful World Cup Marketing Games that drive mass engagement. Whether you are targeting a local derby or a global tournament, the structural rules remain the same. Here is how to design a game that scores both goals and leads.

Kick-off: Balancing Fun vs. Promotional Goals

The “Golden Rule” of gamification is simple: The game must be fun first. If the gameplay is clunky or boring, no amount of prize money will keep a user engaged long enough to see your brand message.

To strike the right balance, treat your branding like the stadium environment, always visible around the action, rather than the referee who stops play.

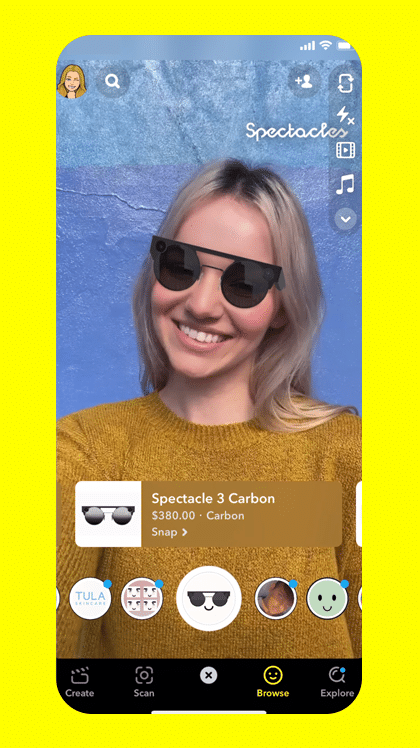

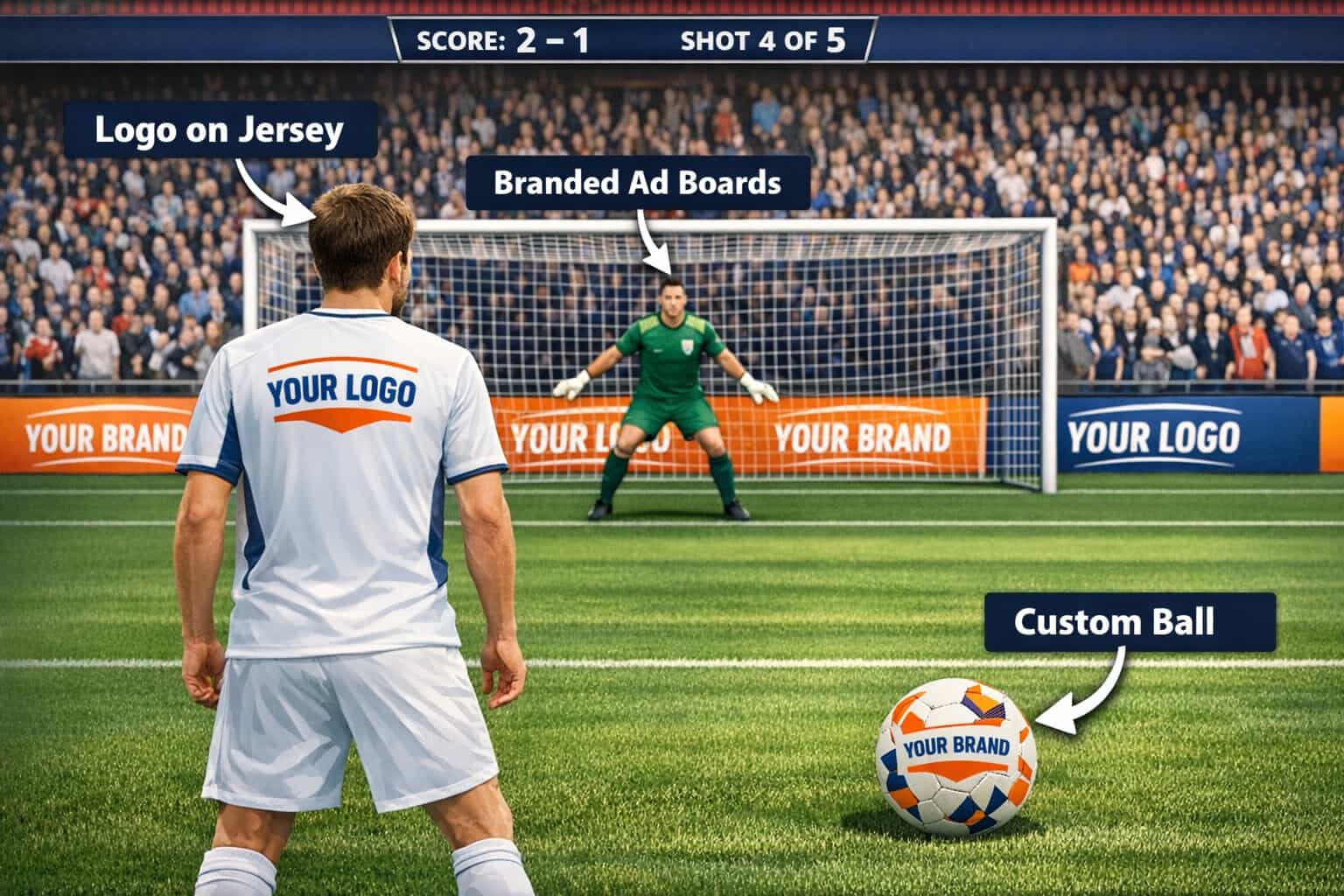

- Passive vs. Active Branding: Don’t interrupt the gameplay with pop-up ads. Instead, integrate your brand into the assets. Place your logo on the center circle, dress the goalkeeper in your brand colors, or use digital LED boards in the background of the game.

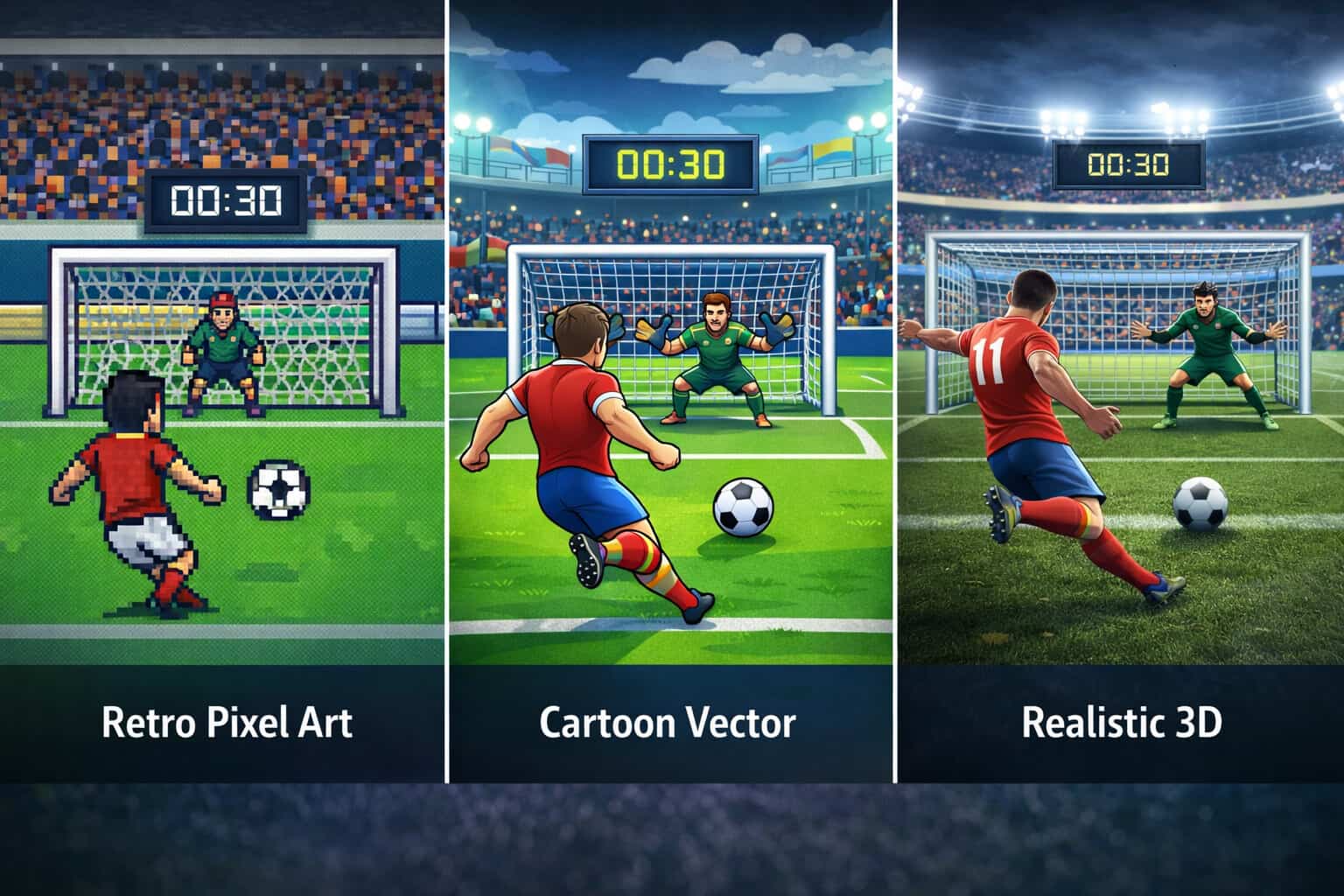

- Keep it Casual: For marketing campaigns, “Hyper-Casual” mechanics work best. You aren’t trying to build the next FIFA console game; you want a simple, addictive loop (like a penalty shootout or keepie-uppie challenge) that can be played with one thumb on a mobile device.

- The 80/20 Rule: Dedicate 80% of the screen real estate to the game and 20% to the UI/Brand messaging. This ensures the user feels they are playing a game, not watching an interactive commercial.

Mapping the User Journey: From Kick-off to Conversion

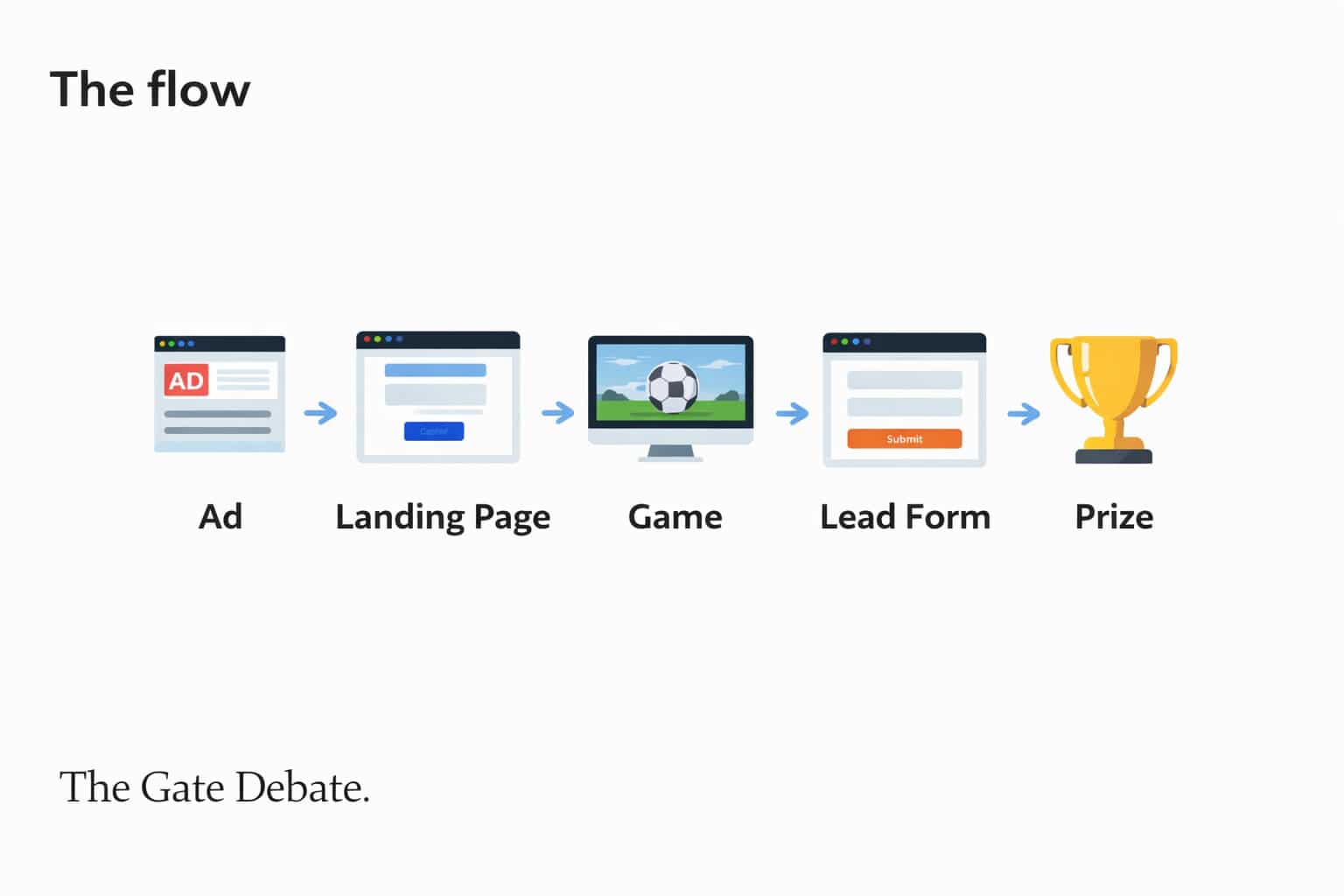

A pretty game is useless if it doesn’t have a clear path to conversion. You need to map the user journey to minimize friction. The ideal flow usually looks like this: Ad/Social Post → Landing Page → Tutorial → Gameplay → Result → Data Capture → Reward.

The most critical decision you will make is where to place the “Data Gate” (the lead form).

- The Pre-Game Gate: You ask for data before they play. This results in fewer players but higher-quality leads, since only genuinely interested users will proceed.

- The Post-Game Gate (Recommended): You let them play first. Once they achieve a score, you ask for their details to “Save the Score” or “Reveal the Prize.” This leverages the psychological principle of reciprocity—you gave them entertainment, so they are more willing to give you their email.
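If you are building the journey yourself rather than configuring a platform, it helps to treat the gate position as a single setting, so you can switch it between campaigns or A/B test it. The Python sketch below assumes the journey is modelled as an ordered list of step names; the step names, `GatePosition`, and `build_journey` are hypothetical and not part of any specific game engine.

```python
from enum import Enum


class GatePosition(Enum):
    PRE_GAME = "pre"    # ask for details before the first kick
    POST_GAME = "post"  # ask for details to "Save the Score" / "Reveal the Prize"


# Hypothetical step names for the journey flow, without the data gate.
BASE_STEPS = ["ad_or_social_post", "landing_page", "tutorial", "gameplay", "result", "reward"]


def build_journey(gate: GatePosition) -> list[str]:
    """Return the ordered journey with the data-capture step inserted at the chosen gate."""
    steps = list(BASE_STEPS)  # copy so the base list is never mutated
    if gate is GatePosition.PRE_GAME:
        # Gate sits between the landing page and the tutorial, before any play.
        steps.insert(steps.index("tutorial"), "data_capture")
    else:
        # Gate sits between the result and the reward, as in the recommended flow.
        steps.insert(steps.index("reward"), "data_capture")
    return steps


print(" -> ".join(build_journey(GatePosition.POST_GAME)))
```

Keeping the gate as one enum value means the rest of the game code never needs to know where the form appears, which makes a pre-game vs. post-game test a configuration change rather than a rebuild.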

The Prize Locker: Integrating Incentives Strategically

Incentives are the fuel that keeps the marketing engine running. However, you don’t always need a massive budget to get results. You just need to structure your rewards correctly.

- Tiered Rewards: Use a mix of prizes to keep every type of player interested:
  - Participation Rewards: Digital coupons or discount codes given to everyone just for playing. These turn engagement into immediate sales opportunities.
  - Performance Rewards: Higher-value prizes for those who top the leaderboard.
  - Sweepstakes: One “Grand Prize” (e.g., a signed jersey or match tickets) that anyone can win via a lucky draw, regardless of skill level.

- The “Near Miss” Psychology: Design your result screen to show users what they almost won. If a user scores 4/5 penalties and sees they missed a 20% discount by just one goal, they are far more likely to replay the game. Every replay is another exposure to your brand, which strengthens recall.
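The reward logic behind tiered prizes and a near-miss result screen can be quite small. Below is a minimal Python sketch for a five-kick penalty shootout; the tier thresholds, prize labels, and function names are hypothetical placeholders, not recommendations. The sweepstakes draw uses the standard-library `secrets` module so the pick is not predictable from a seed.

```python
import secrets
from dataclasses import dataclass
from typing import Optional

# Hypothetical performance tiers for a five-kick shootout, highest first:
# (minimum goals required, performance reward).
PERFORMANCE_TIERS = [
    (5, "20% discount"),
    (3, "10% discount"),
]
# Hypothetical participation reward that every player receives just for playing.
PARTICIPATION_REWARD = "5% coupon"


@dataclass
class RoundResult:
    rewards: list[str]
    near_miss_message: Optional[str]


def evaluate_round(goals: int) -> RoundResult:
    """Work out the rewards for a round and any 'near miss' message."""
    rewards = [PARTICIPATION_REWARD]
    near_miss = None
    for threshold, prize in PERFORMANCE_TIERS:  # highest tier first
        if goals >= threshold:
            rewards.append(prize)  # best tier reached; lower tiers don't stack
            break
        if threshold - goals == 1 and near_miss is None:
            # Missed this tier by a single goal: show what they almost won.
            near_miss = f"So close! One more goal would have unlocked a {prize}."
    return RoundResult(rewards, near_miss)


def draw_grand_prize_winner(entrant_emails: list[str]) -> str:
    """Sweepstakes draw: every unique entrant has an equal chance, whatever their score."""
    unique_entrants = list(dict.fromkeys(entrant_emails))  # one entry per person
    return secrets.choice(unique_entrants)
```

With these placeholder tiers, `evaluate_round(4)` returns the 5% coupon plus the 10% discount, and a near-miss message pointing at the 20% discount the player just missed, which mirrors the 4/5 example above.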

Viral Mechanics: Turning Players into Promoters

The most cost-effective traffic is the traffic you don’t pay for. Your game should be designed with viral loops that encourage users to bring in their network.

- Share Scores, Not Brands: Users are hesitant to share a generic brand advertisement, but they love to share their achievements. Make sure your social share buttons pre-populate text like: “I just scored 10 goals against the keeper! Can you beat me?”

- The Challenge Mechanic: Add a direct “Challenge a Friend” button. It sends a unique link to a friend via WhatsApp or Messenger, inviting them to beat that exact score.

- Incentivized Sharing: Offer an in-game advantage for sharing. For example, give the player an “extra life” or a “golden ball” if they share the campaign on their social media story.
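Under the hood, a challenge link is just the campaign URL with the challenger's name and score attached, so the friend's game can load the score to beat. A minimal Python sketch, assuming a hypothetical campaign URL and hypothetical query parameters named `challenger` and `target`; the share URL uses WhatsApp's public click-to-chat format (`https://wa.me/?text=...`).

```python
from urllib.parse import parse_qs, quote, urlencode, urlparse

CAMPAIGN_URL = "https://example.com/penalty-challenge"  # hypothetical campaign URL


def challenge_link(player_name: str, score: int) -> str:
    """Build a link that opens the game pre-loaded with the score to beat."""
    query = urlencode({"challenger": player_name, "target": score})
    return f"{CAMPAIGN_URL}?{query}"


def share_text(score: int, link: str) -> str:
    # Share the achievement, not the brand.
    return f"I just scored {score} goals against the keeper! Can you beat me? {link}"


def whatsapp_share_url(text: str) -> str:
    # Click-to-chat format; quote() encodes spaces as %20 rather than '+'.
    return "https://wa.me/?" + urlencode({"text": text}, quote_via=quote)


def read_challenge(url: str) -> tuple[str, int]:
    """On the friend's side: recover the challenger and the target score from the link."""
    params = parse_qs(urlparse(url).query)
    return params["challenger"][0], int(params["target"][0])


link = challenge_link("Sam", 10)
print(whatsapp_share_url(share_text(10, link)))
```

Because the target score travels in the URL, anyone can edit it before sharing. That is usually harmless for bragging rights, but if a challenge unlocks prizes, it is safer to store challenges server-side or sign the parameters.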

Optimization: A/B Testing for Effectiveness

Launch day is just the beginning. To maximize ROI, you should be A/B testing different elements of your football game.

- Test the “Hook”: Try different calls to action on your landing page. Does “Play to Win” convert better than “Test Your Football IQ”?

- Test the Difficulty: This is crucial. If the goalkeeper is too good, users rage-quit; if he is too slow, users get bored. Finding the “Flow State”, where the game is challenging but still winnable, requires testing.

- Test the Visuals: Sometimes simply changing the color of the “Claim Prize” button or swapping the background stadium image can noticeably lift click-through rates.
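Before declaring a winner, check that the difference between variants is larger than random noise. A common way to do this for conversion rates is a two-proportion z-test; the Python sketch below implements it with the standard library only, plus a hash-based helper that keeps each player in the same variant across visits. The function names, variant names, and the visitor and conversion counts are hypothetical and purely illustrative.

```python
import hashlib
from math import erf, sqrt


def assign_variant(user_id: str, variants: list[str]) -> str:
    """Deterministically bucket a player so they always see the same variant."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return variants[int.from_bytes(digest[:8], "big") % len(variants)]


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates B - A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate assuming no real difference
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # all or nothing converted in both arms: no evidence either way
    z = (p_b - p_a) / se
    # Standard normal CDF via erf: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


# Illustrative numbers only: "Play to Win" (A) vs "Test Your Football IQ" (B).
z, p = two_proportion_z_test(conv_a=120, n_a=1000, conv_b=150, n_b=1000)
print(f"z = {z:.2f}, p = {p:.3f}")

# The same bucketing works for difficulty tests, e.g. two goalkeeper speeds.
print(assign_variant("player-42", ["keeper_slow", "keeper_fast"]))
```

With these made-up counts the p-value comes out just under 0.05, a borderline result; many teams would keep such a test running longer before switching all traffic to the winner. Hashing the player ID instead of picking randomly on each visit stops returning players from flipping between variants and muddying the comparison.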

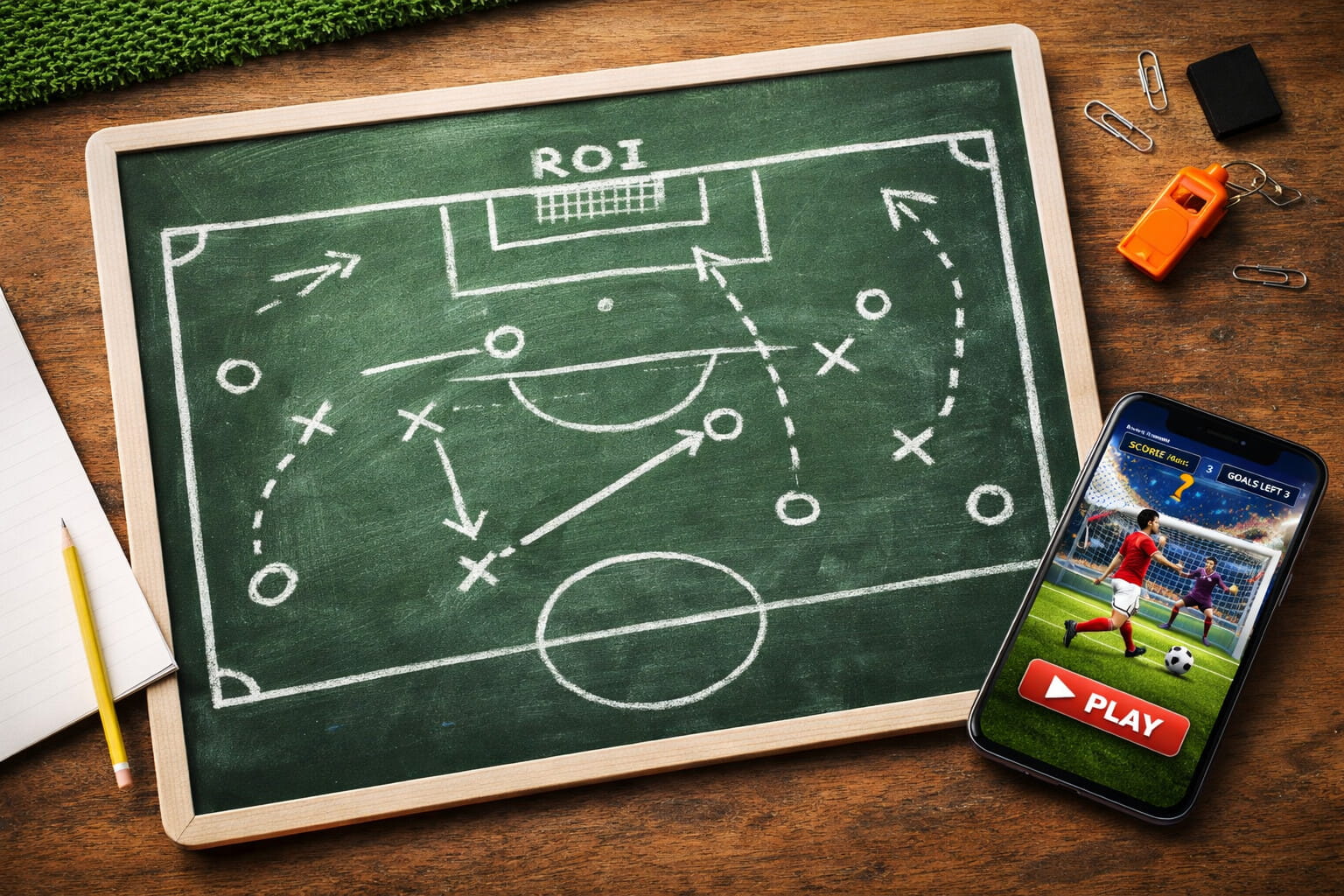

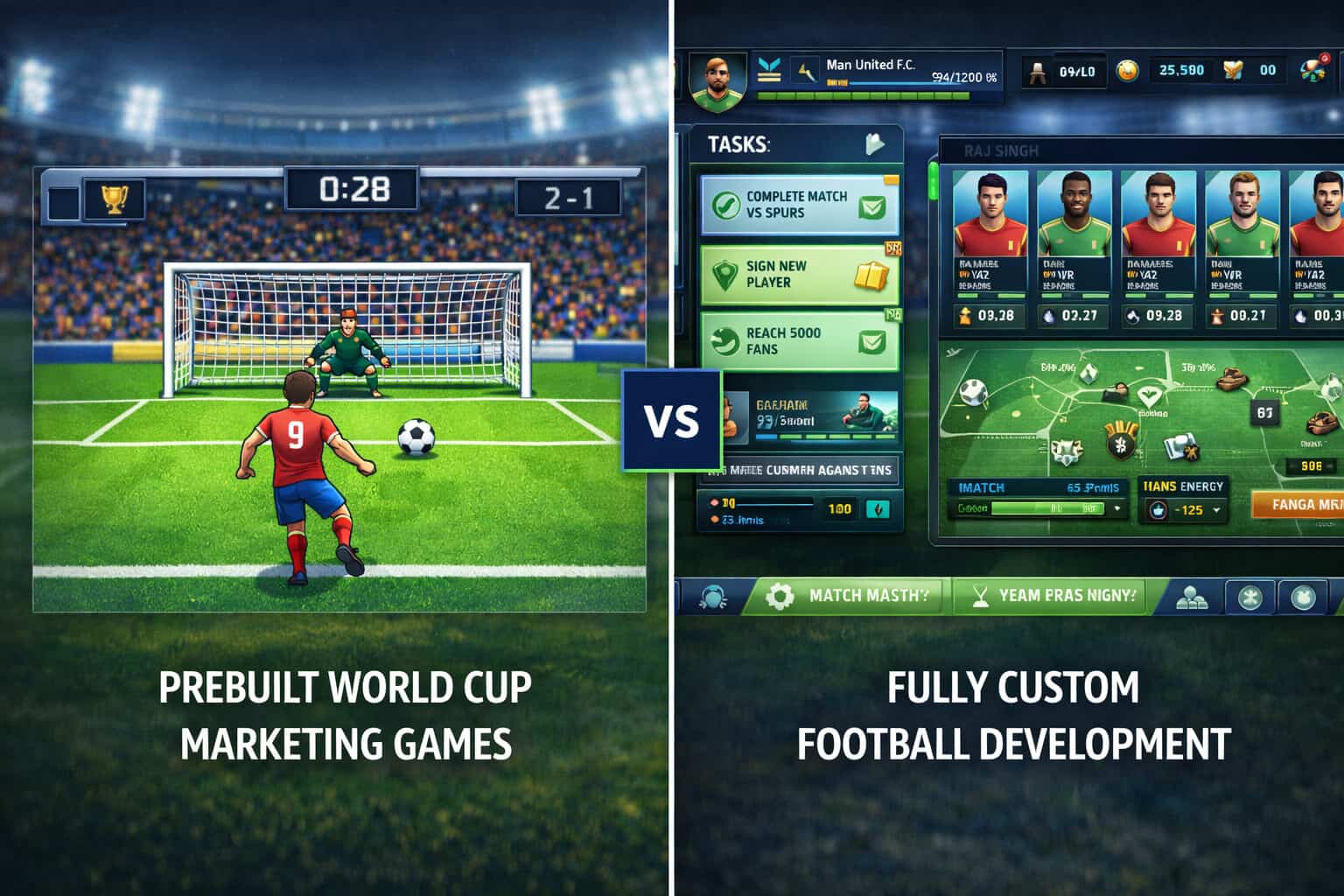

Speed to Market: Why You Don’t Need to Code from Scratch

Creating a custom game from scratch can take months of development and cost a fortune in bug testing. For a time-sensitive football campaign, speed and reliability are paramount.

This is why smart marketers opt for Prebuilt Games: white-label solutions with the code, physics, and marketing mechanics already built in. You simply skin them with your brand assets.

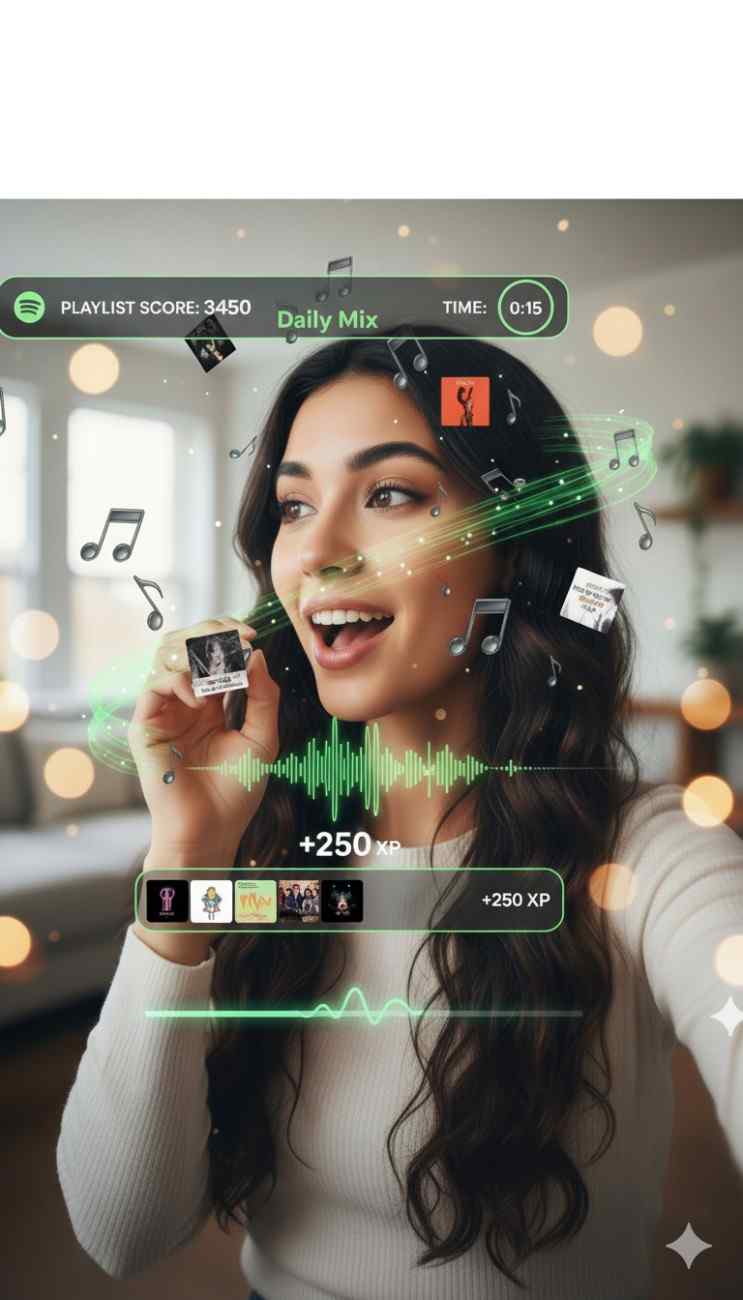

We offer 5 proven game mechanics ready to be deployed for your next campaign:

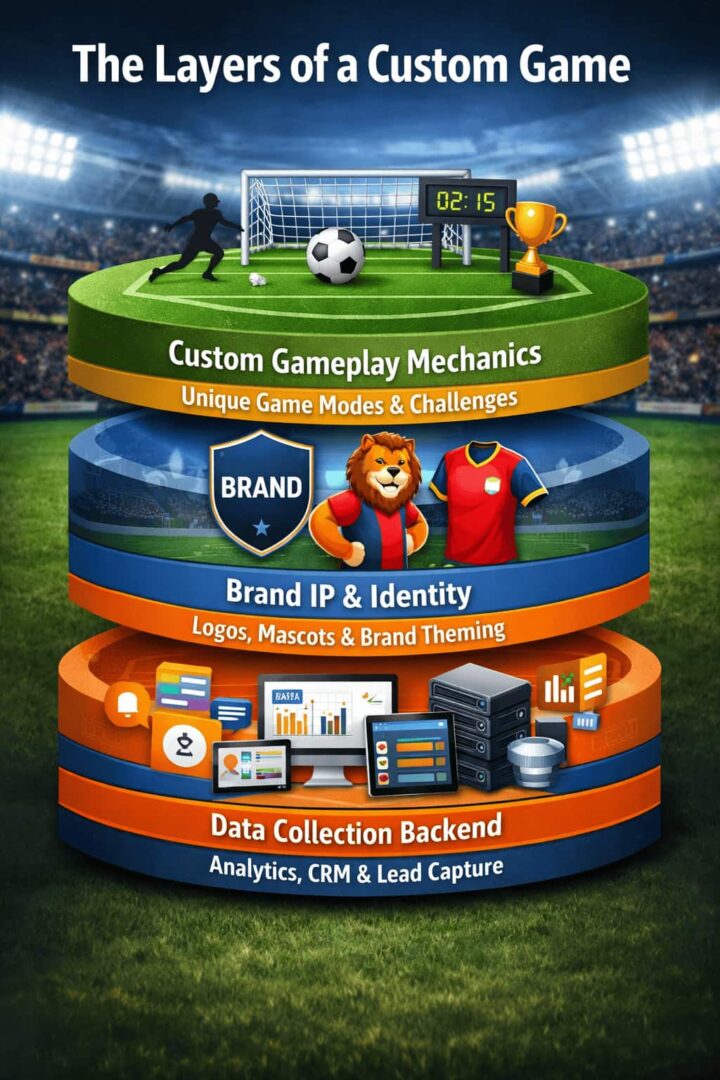

Penalty Shootout: The classic. High tension, high engagement, perfect for mobile.

Predict the Score: A non-skill based prediction game that keeps users coming back before every match.

Football Runner: An endless runner game where users dodge tackles and collect brand logos.

Keepie-Uppie Challenge: A test of timing and skill that is highly addictive.

Football Trivia: Great for educating users about your brand or football history.

Conclusion

A successful football game for marketing isn’t just about graphics; it’s about the psychological journey of the user. By balancing fun with promotional goals, mapping a frictionless user journey, and using smart incentives, you can turn fans into loyal customers.

Don’t let the whistle blow on your campaign before you’ve even started. Leveraging prebuilt game engines allows you to focus on the marketing strategy rather than the code. Ready to kick off? Explore our game library and start planning your victory today.