When I first experienced Virtual Reality (VR), I remember feeling like I had stepped into the future.

It wasn’t just about the game I was playing but more about the way everything came together—hardware, software, and all those unseen pieces working in harmony to transport me into another world.

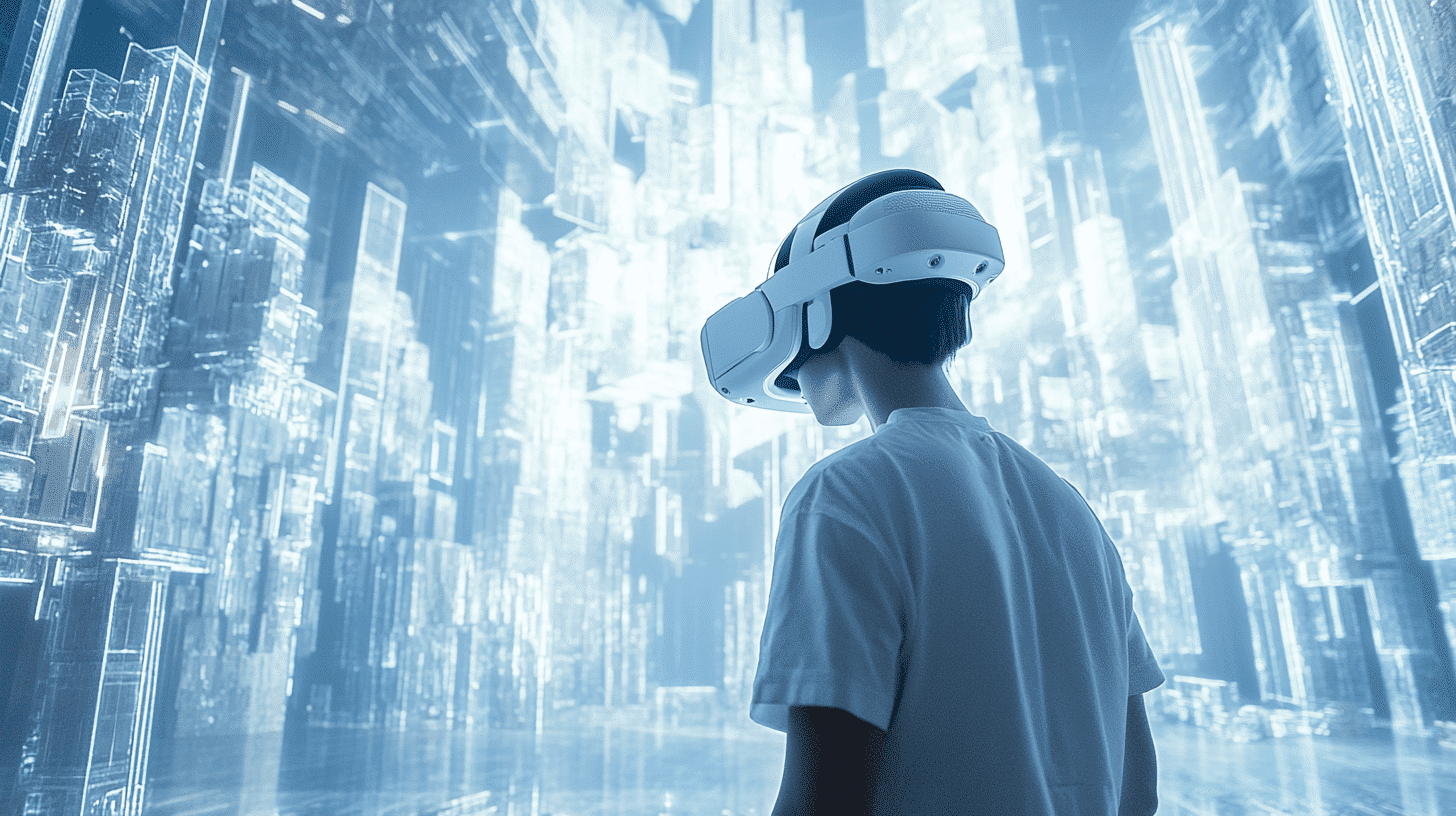

So, when people ask me about the architecture of a Virtual Reality system, I always think of it as layers, each doing its part to make that experience possible. Let me break it down for you.

Architecture of a Virtual Reality System

1. The Hardware Layer: Your Gateway to Another World

The first thing that hits you in a VR setup is the hardware, right? We’re talking about the headset, controllers, and all those sensors that track every move.

a) VR Head-Mounted Display (HMD):

- Provides stereoscopic 3D rendering of the virtual environment.

- Examples: Oculus Rift, HTC Vive, PlayStation VR.

- Features include head tracking, motion sensors, and a separate display for each eye.
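To give a feel for what "a display for each eye" means for rendering, here is a minimal sketch of how a renderer might place the two eye cameras around the tracked head position. The function name `eye_positions`, the 63 mm default eye spacing, and the coordinate convention (Y up, yaw 0 looking down -Z) are my own assumptions for illustration, not something a particular headset SDK prescribes.

```python
import math

def eye_positions(head_pos, yaw_rad, ipd_m=0.063):
    """Return (left_eye, right_eye) world positions for a tracked head.

    Assumes a right-handed, Y-up coordinate system where yaw 0 looks
    down -Z and +X is to the viewer's right. ipd_m is the assumed
    distance between the eyes in metres (hypothetical default).
    """
    # Unit vector pointing to the viewer's right for the given yaw.
    right = (math.cos(yaw_rad), 0.0, -math.sin(yaw_rad))
    half = ipd_m / 2.0
    left_eye = tuple(h - half * r for h, r in zip(head_pos, right))
    right_eye = tuple(h + half * r for h, r in zip(head_pos, right))
    return left_eye, right_eye

# Head at standing height, looking straight ahead.
left, right = eye_positions((0.0, 1.7, 0.0), yaw_rad=0.0)
print(left, right)
```

The scene is then rendered once from each position, and the small horizontal offset between the two images is what produces the sense of depth.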

b) Input Devices:

- Handheld Controllers: Joysticks and dedicated VR controllers (e.g., Oculus Touch, Vive Controllers) let users interact with objects in the virtual world.

- Gloves or Haptic Devices: Provide tactile feedback (haptic feedback) to simulate the sense of touch.

- Body Tracking Sensors: Full-body sensors or suits that track the user’s physical movements and map them to their avatar in the virtual world.

c) Tracking Systems:

- External Sensors (Positional Tracking): Cameras or external base stations that track the user’s movement in the physical space (e.g., HTC Vive Lighthouse base stations).

- Inside-out Tracking: Sensors built into the headset that track the environment without external cameras.

- Eye Tracking: Some VR systems include eye-tracking technology for gaze-based interaction.
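Gaze-based interaction usually boils down to a simple question: which object is the user looking at? One common way to answer it is to cast a ray from the eye along the gaze direction and test it against the objects in the scene. The sketch below does that with spheres standing in for the objects. The names `gaze_hit_distance` and `pick_gaze_target`, and the sphere stand-ins themselves, are illustrative assumptions rather than part of any eye-tracking API.

```python
import math

def gaze_hit_distance(origin, direction, center, radius):
    """Distance along the gaze ray to a sphere, or None if the ray misses.

    Assumes direction is non-zero and the eye is outside the sphere.
    """
    length = math.sqrt(sum(c * c for c in direction))
    d = [c / length for c in direction]            # normalised gaze direction
    oc = [c - o for o, c in zip(origin, center)]   # eye -> sphere centre
    t_closest = sum(a * b for a, b in zip(oc, d))  # projection onto the ray
    if t_closest < 0:
        return None                                # sphere is behind the viewer
    dist_sq = sum(a * a for a in oc) - t_closest ** 2
    if dist_sq > radius ** 2:
        return None                                # ray passes beside the sphere
    return max(0.0, t_closest - math.sqrt(radius ** 2 - dist_sq))

def pick_gaze_target(origin, direction, targets):
    """Return the name of the nearest target hit by the gaze ray, or None."""
    best_name, best_t = None, math.inf
    for name, (center, radius) in targets.items():
        t = gaze_hit_distance(origin, direction, center, radius)
        if t is not None and t < best_t:
            best_name, best_t = name, t
    return best_name

# Hypothetical scene: a button straight ahead, a lamp off to the side.
scene = {"button": ((0.0, 0.0, -2.0), 0.5), "lamp": ((3.0, 0.0, -2.0), 0.5)}
print(pick_gaze_target((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), scene))
```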

d) Computational Power:

- PC or Console: High-performance hardware is required to render VR experiences in real-time, with powerful GPUs (e.g., NVIDIA, AMD) and CPUs to process the VR environment.

- Mobile VR: Lower-end VR experiences can run on mobile-class hardware, either a smartphone slotted into a viewer (e.g., Google Cardboard) or a standalone headset built around a mobile chipset (e.g., Oculus Go).
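A quick bit of arithmetic shows why "real-time" is so demanding here. The time available to render one frame is simply the inverse of the display's refresh rate. The refresh rates below are illustrative values I picked, not figures from any particular headset's spec sheet.

```python
def frame_budget_ms(refresh_hz):
    """Milliseconds available to produce one frame at a given refresh rate."""
    return 1000.0 / refresh_hz

# Illustrative refresh rates (assumed, not tied to a specific headset).
for hz in (60, 72, 90, 120):
    print(f"{hz} Hz -> {frame_budget_ms(hz):.1f} ms per frame")
# 60 Hz -> 16.7 ms per frame
# 72 Hz -> 13.9 ms per frame
# 90 Hz -> 11.1 ms per frame
# 120 Hz -> 8.3 ms per frame
```

Keep in mind that the views for both eyes have to fit inside that single budget, which is a big part of why the GPU matters so much.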

2. The Software Layer: Where the Magic Happens

Now, hardware is just one part. All that amazing stuff is useless without the right software to back it up. At the heart of it all is the rendering engine—this is where the VR magic really comes to life.

a) Rendering Engine:

- The core of the VR system, which renders the virtual world in real-time.

- Examples: Unity 3D, Unreal Engine.

- Handles lighting, shadows, textures, and physics calculations to create a realistic 3D environment.

b) VR SDKs (Software Development Kits):

- Provide libraries and APIs that interface with VR hardware, enabling developers to build VR experiences.

- Examples: Oculus SDK, SteamVR SDK, OpenVR.

c) Graphics API:

- Low-level software that interfaces with the GPU to handle rendering tasks.

- Examples: OpenGL, DirectX, Vulkan.

d) Virtual Environment and Asset Management:

- 3D Models: The objects and environments in the virtual world.

- Textures and Materials: Surface details of virtual objects (e.g., smooth, rough, shiny).

- Audio: 3D spatial sound to enhance immersion.

e) Physics Engine:

- Simulates real-world physics like gravity, collisions, and object interactions.

- Examples: NVIDIA PhysX, Havok Physics.
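To show the basic idea behind gravity and collisions, here is a toy one-dimensional physics step: an object falls, hits the floor, and bounces. This is only a sketch of the general technique (semi-implicit Euler integration with a floor check). It is not how PhysX or Havok work internally, and the `step` function and `restitution` parameter are names I made up for the example.

```python
GRAVITY = -9.81  # m/s^2, pulling along -Y

def step(y, vy, dt, restitution=0.5):
    """Advance a falling object's height y and vertical velocity vy by dt seconds."""
    vy += GRAVITY * dt      # apply gravity to the velocity first
    y += vy * dt            # then move using the updated velocity
    if y < 0.0:             # collision with the floor at y = 0
        y = 0.0
        vy = -vy * restitution  # bounce back, losing some energy
    return y, vy

# Drop an object from 1 m and simulate one second at an assumed 90 steps per second.
y, vy = 1.0, 0.0
for _ in range(90):
    y, vy = step(y, vy, dt=1.0 / 90.0)
print(round(y, 3), round(vy, 3))
```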

f) User Interface (UI) and Interaction Management:

- Provides mechanisms for users to interact with virtual objects.

- UI elements like buttons, menus, and other interactive objects are placed within the VR environment.

- Gesture Recognition: The system recognizes user gestures and translates them into actions within the VR world (e.g., grabbing or moving an object).
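As a rough illustration of gesture recognition, here is one simple way a "grab" could be detected from hand-tracking data: watch the gap between the thumb tip and the index fingertip. The `PinchDetector` class and its distance thresholds are hypothetical values I chose for the sketch. Real hand-tracking SDKs expose their own gesture APIs, and this is not one of them.

```python
import math

class PinchDetector:
    """Detects a pinch/grab from thumb and index fingertip positions (metres)."""

    def __init__(self, close_m=0.02, open_m=0.04):
        # Two thresholds (hysteresis) so the grab does not flicker on and off
        # when the fingers hover right at the boundary. Values are assumed.
        self.close_m = close_m
        self.open_m = open_m
        self.grabbing = False

    def update(self, thumb_tip, index_tip):
        gap = math.dist(thumb_tip, index_tip)
        if not self.grabbing and gap < self.close_m:
            self.grabbing = True      # fingers came together: start grab
        elif self.grabbing and gap > self.open_m:
            self.grabbing = False     # fingers moved clearly apart: release
        return self.grabbing

detector = PinchDetector()
thumb = (0.0, 0.0, 0.0)
for gap in (0.06, 0.015, 0.03, 0.05):
    print(gap, detector.update(thumb, (gap, 0.0, 0.0)))
```

Notice that the 0.03 m sample still counts as grabbing. That is the hysteresis at work: once you are holding something, a little finger jitter should not make you drop it.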

3. Interaction Layer: Bridging Reality and Virtual Reality

When I first started tinkering with VR systems, I quickly realized the most important thing wasn’t just what you saw but how you interacted with it. This is where the interaction layer kicks in.

Imagine this: You reach out with your hand to grab something in the virtual world, and the system has to translate that motion into something the computer understands.

a) Input Processing:

- Hand, body, and controller movements are tracked and mapped into virtual space.

- Gesture and motion recognition algorithms interpret physical actions.

This happens through complex input processing systems that take the signals from your controllers (or gloves) and match them up to virtual movements.
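To make "matching signals up to virtual movements" a bit more concrete, here is a minimal sketch of the core step: taking a controller position reported in the physical tracking space and converting it into a position in the virtual world. I am assuming the virtual play area is described by an origin, a yaw rotation, and a uniform scale. The function name `tracking_to_world` and those parameters are my own, not from a specific SDK.

```python
import math

def tracking_to_world(p, origin=(0.0, 0.0, 0.0), yaw_rad=0.0, scale=1.0):
    """Map a tracked point p = (x, y, z) in metres into virtual-world coordinates.

    Assumes a Y-up coordinate system: the point is rotated about the Y axis
    by yaw_rad, scaled uniformly, then shifted to the play area's origin.
    """
    x, y, z = p
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    rx = c * x + s * z       # rotate about the vertical (Y) axis
    rz = -s * x + c * z
    return (origin[0] + scale * rx,
            origin[1] + scale * y,
            origin[2] + scale * rz)

# Controller held 1 m to the right, 1.2 m up, half a metre in front of the user,
# with the play area placed at (10, 0, 5) in the virtual world.
print(tracking_to_world((1.0, 1.2, -0.5), origin=(10.0, 0.0, 5.0)))
# (11.0, 1.2, 4.5)
```

A real system applies the same idea to full poses (position plus orientation) many times per second, but the principle is the same.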

Some systems even use gesture recognition to understand hand motions. If you’ve ever waved at someone in VR or given a thumbs-up to your virtual buddy, gesture recognition was making that possible. And of course, the feedback you get—whether it’s a subtle vibration in your controller or 3D audio that changes depending on where you turn—makes the virtual world feel more real.

b) Feedback Systems:

- Haptic Feedback: Devices like VR gloves or controllers provide tactile responses when the user interacts with virtual objects.

- Audio Feedback: 3D sound that responds to user interaction and environmental cues.

- Visual Feedback: Changes in the virtual environment based on user actions, such as object movements or menu selections.
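Here is a small sketch of the audio side of that feedback loop: working out how loud a sound should be in each ear based on where the source is relative to the listener and which way they are facing. It uses inverse-distance attenuation and constant-power stereo panning, which is a big simplification. Real VR audio engines typically use far more sophisticated techniques such as HRTFs. The function name `stereo_gains` and its parameters are assumptions for this example.

```python
import math

def stereo_gains(listener_pos, listener_yaw, source_pos, ref_dist=1.0):
    """Return (left_gain, right_gain) for a sound source.

    Assumes Y is up, yaw 0 faces -Z, and only horizontal position matters.
    """
    dx = source_pos[0] - listener_pos[0]
    dz = source_pos[2] - listener_pos[2]
    horizontal = math.hypot(dx, dz)
    # Inverse-distance attenuation, clamped so nearby sounds don't blow up.
    gain = ref_dist / max(horizontal, ref_dist)
    # Project the source direction onto the listener's "right" vector:
    # -1 means fully left, +1 means fully right.
    right_x, right_z = math.cos(listener_yaw), -math.sin(listener_yaw)
    pan = (dx * right_x + dz * right_z) / horizontal if horizontal > 0 else 0.0
    # Constant-power panning keeps perceived loudness steady as you turn.
    angle = (pan + 1.0) * math.pi / 4.0
    return gain * math.cos(angle), gain * math.sin(angle)

# A sound 2 m to the listener's right: almost all of it lands in the right ear.
print(stereo_gains((0.0, 0.0, 0.0), 0.0, (2.0, 0.0, 0.0)))
# Turn to face the sound and both ears hear it equally.
print(stereo_gains((0.0, 0.0, 0.0), -math.pi / 2, (2.0, 0.0, 0.0)))
```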

I always joke that VR is like playing in a movie where you’re the actor, and the world reacts to everything you do.

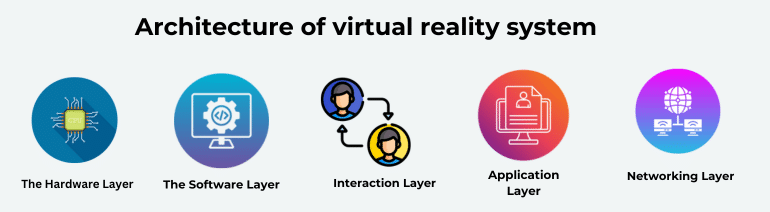

4. Application Layer: The Real Fun Stuff

So, once you’ve got the hardware and software working together, what’s next? It’s all about the applications.

This is what you, as the user, are there for—the VR games, the training simulations, the virtual museums.

For me, the cool part is that VR can be anything, from a simple space shooter game to a complex training simulator for surgeons. Imagine being able to practice heart surgery in VR before ever touching a real patient. That’s not sci-fi anymore—it’s happening.

a) Virtual Reality Applications:

- Games: VR games offer fully immersive experiences.

- Training Simulations: Used in fields like healthcare, engineering, or aviation to provide training without real-world consequences.

- Education: VR classrooms or virtual tours of historical sites.

- Architectural Visualization: Allows architects and clients to walk through virtual buildings.

- Health and Therapy: VR systems designed to reduce anxiety, provide physical rehabilitation, or offer mental health treatment.

b) Content Management System:

- System for managing VR content updates, levels, assets, and versions.

5. Networking Layer: Connecting Virtual Worlds

If you’ve ever played multiplayer VR games, you know how fun (and chaotic) it can be to interact with other real people in the same virtual world.

This is made possible by the networking layer. Multiplayer engines ensure that everyone’s actions are synced in real-time, whether you’re battling aliens together or just hanging out in a virtual lounge.

Without it, you’d see people’s avatars lagging behind their real-world movements, and that’s a total immersion killer.

a) Multiplayer Engine:

- Handles real-time communication between multiple users in the same VR environment.

- Synchronizes user actions, avatar positions, and interactions.
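One widely used trick for keeping remote avatars smooth is snapshot interpolation: instead of snapping an avatar to each position update as it arrives, the client renders it slightly in the past and blends between the two most recent updates around that moment. Here is a minimal sketch of the idea. The snapshot format (a timestamp plus an `(x, y, z)` position) and the function name `interpolate_position` are assumptions for illustration, not a particular multiplayer engine's API.

```python
def interpolate_position(snapshots, render_time):
    """Estimate an avatar's position at render_time.

    snapshots: list of (timestamp_seconds, (x, y, z)) tuples, sorted by
    strictly increasing timestamp, as received from the network.
    """
    if render_time <= snapshots[0][0]:
        return snapshots[0][1]                 # before the first update
    for (t0, p0), (t1, p1) in zip(snapshots, snapshots[1:]):
        if t0 <= render_time <= t1:
            alpha = (render_time - t0) / (t1 - t0)   # 0 at t0, 1 at t1
            return tuple(a + alpha * (b - a) for a, b in zip(p0, p1))
    return snapshots[-1][1]                    # newer than every update: hold last

# Two updates 100 ms apart; render halfway between them.
updates = [(0.0, (0.0, 0.0, 0.0)), (0.1, (1.0, 0.0, 0.0))]
print(interpolate_position(updates, 0.05))
```

The trade-off is a small, constant delay in what you see of other players, in exchange for motion that looks fluid even when packets arrive unevenly.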

b) Cloud Services:

- Cloud-based storage and computation (e.g., offloading complex rendering or physics calculations).

- Asset streaming for dynamic content updates.

Final Thoughts: Putting It All Together

When you take a step back, the architecture of a Virtual Reality system isn’t just about the tech. It’s about how all these layers (hardware, software, interaction, application, and networking) work together to create something that feels seamless.

I think the real beauty of VR is that, when everything works perfectly, you forget it’s a system at all. You’re just… there, wherever “there” is.

And trust me, once you’re lost in a virtual world, it’s hard to come back to reality without feeling like something magical just happened.